Org Structural Innovation with 4 Examples

⏱️ Time to read: 40 minutes

Part 2

This post is part 2 of a series.

Part 1 here: Agentic Coding Slot Machines – Did We Just Summon a Genie Addiction?

What's in Store

In Part 1 we explored how Agentic Coding just flipped software on its head, chainsaws for the mind, infinite slot machines, Claude Max, Cursor, and vibe-coding 4 projects at once from the pool.

What you’ll learn in this post:

- Why old org charts are breaking down under AI leverage

- Ethan Mollick's "Leadership → Crowd → Lab" blueprint for orgs

- How Shopify, Answer.AI, Cursor & Google are going AI first

- Why high agency is the new cheat code

- Overemployment, the Stanford study bombshell and Soham

- Why the Nordic mission command military structure beat the Dutch in adaptation

AI First

In a recent interview on the Strange Loop Podcast, titled: Every leader needs this AI strategy, Wharton professor and leading AI researcher Ethan Mollick (@emollick) gave some really excellent advice based on lots of real world interviews and research of companies big and small.

He says that in a study at Procter and Gamble:

individuals working alone with AI performed as well as teams

If you consider the ability for individuals to achieve 80% of any skill in parallel with an agent, via 4 x Claude Slot Machines versus the old way of human brain-to-voice-to-brain syncing of "hopping on a call", you can see why.

Ethan goes on to say that teams:

that work with AI were much more likely to come up with really breakthrough ideas.

He points out that the first org chart was created in 1855 for the New York and Erie Railroad as a solution to a problem of coordinating physical assets (trains) with a new technology (the telegraph).

Even breakthroughs like Henry Ford's production lines and early 2000s agile development:

broke because they all depended on there being only one form of intelligence available which is human.

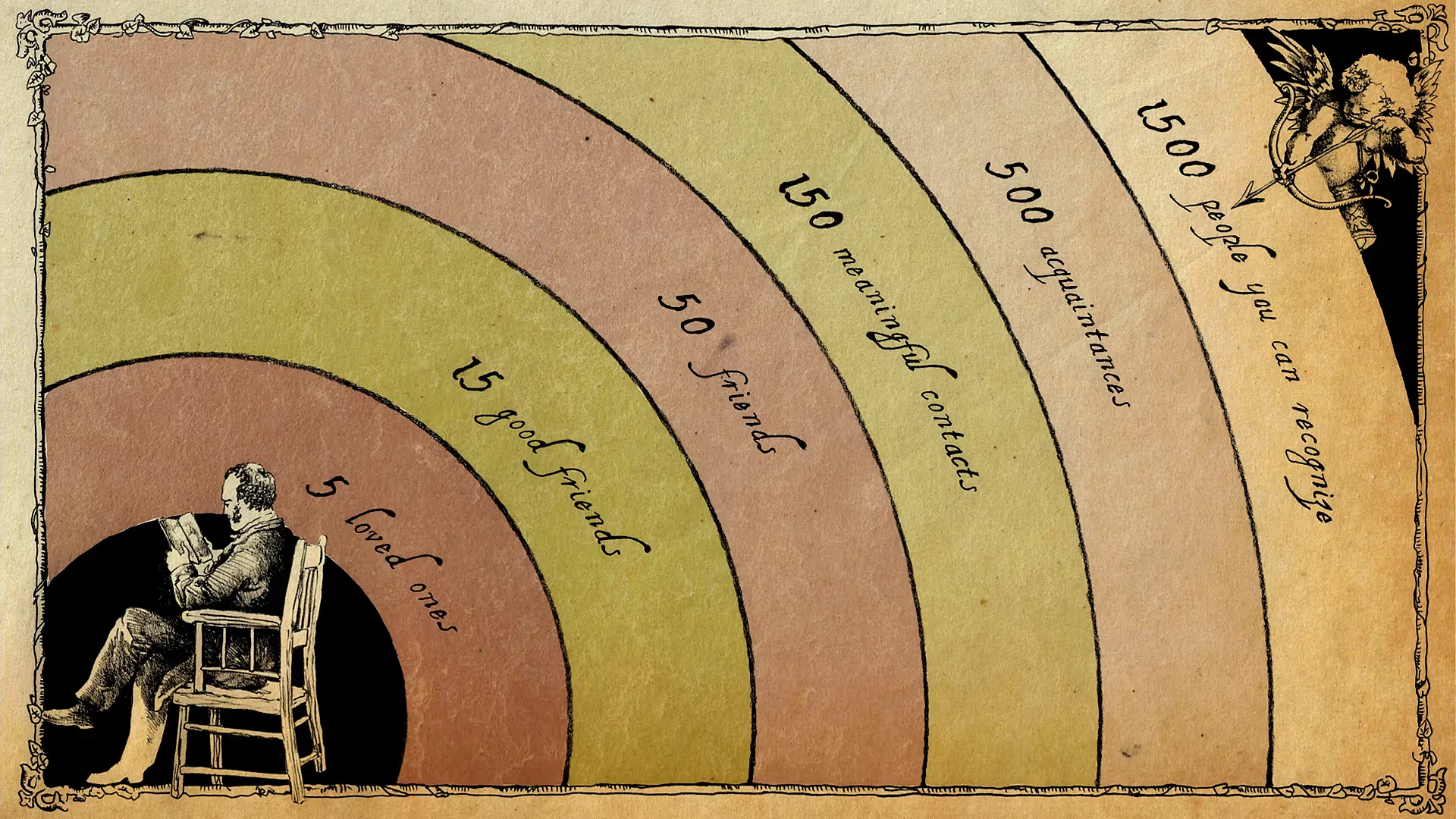

In the old world, where intelligence comes in human-sized packages, you must organize around a span of control of five or seven people (the inner ring of Dunbar's number is 5).

Dunbar's number proposes a cognitive limit on how many people you can keep tabs on.

However, those constraints no longer hold.

Everything has changed.

There are clear implications of using organizational methodologies from 1855 in this AI first world, and Ethan goes on to lament that organizational innovation is a lost art:

modern western companies have given up on organizational innovation as something that they do.

The Leadership, Crowd, Lab Model

One way Ethan suggests existing organizations can find their way is to adopt a three-step model he calls: Leadership -> Crowd -> Lab.

- Leadership sets the safety / direction

It starts with leadership having to resolve the natural conflict between an employee discovering they can automate their job and the benefits of them sharing said efficiency gains with their manager.

From the employee's perspective he points out:

why would I ever want to share my productivity benefits with the organization without being compensated

To solve this, he says, you must adopt an attitude of:

We won't fire people for this. We'll grow bigger.

You can build tools and techniques that are really useful, but ultimately it has to be people in the company who figure out if something is good or bad.

They're the ones with the experience and evidence to do it. If they're terrified of making those judgments because they might get fired, punished for using AI, or replaced if there's an efficiency gain, they'll never show you an efficiency gain.

He goes on to ask:

how do you create the incentive so they share what they're doing, right?

As an example he says one company they studied gave out $10,000 cash prizes at the end of every week to whoever did the best job automating their job.

- Crowd means let everyone explore AI tools

Ethan estimates that only 1-2% of employees are AI naturals.

And then you'll find like one or two percent of your organization is just brilliant at this stuff. They're amazing at it. Those are the people who will be able to lead you in your AI development effort.

He suggests rolling out the tooling maximally to everyone and handpicking your internal high agency people:

those become the people that become the center of your lab and figure out how to use it.

- Build Labs means rethinking KPIs

Ethan then suggests orgs build internal labs to scale discoveries by putting their best AI capable talent to work: building tools and workflows to supersize everyone else.

Since we are in uncharted territory, Ethan says orgs need to pivot to a different way to orient goals and measure success than traditional KPIs:

in the early R&D phase, the worst thing you do is have a bunch of KPIs, right. I really worry about KPIs, measurable KPIs being doom.

Instead he emphasizes the key to operating a lab type environment is the freedom to explore.

Maximalism

Another thing Ethan talks about is not holding back.

Be Maximalist, not Incremental. I think that the thing to aim is to be maximalist. I think too few organizations are maximalist. Just push the system to do everything.

An example of being maximalist he gives is:

Every ideation session you stop in the middle of meetings and you ask AI how's it going so far or whether or not you should continue the meeting at all.

On Hiring, Ethan advises:

Before you hired anyone, you needed to show um you need to spend two hours as a team trying to do the job with AI and then rewrite the job description around the fact that AI would be used.

Choose a Path

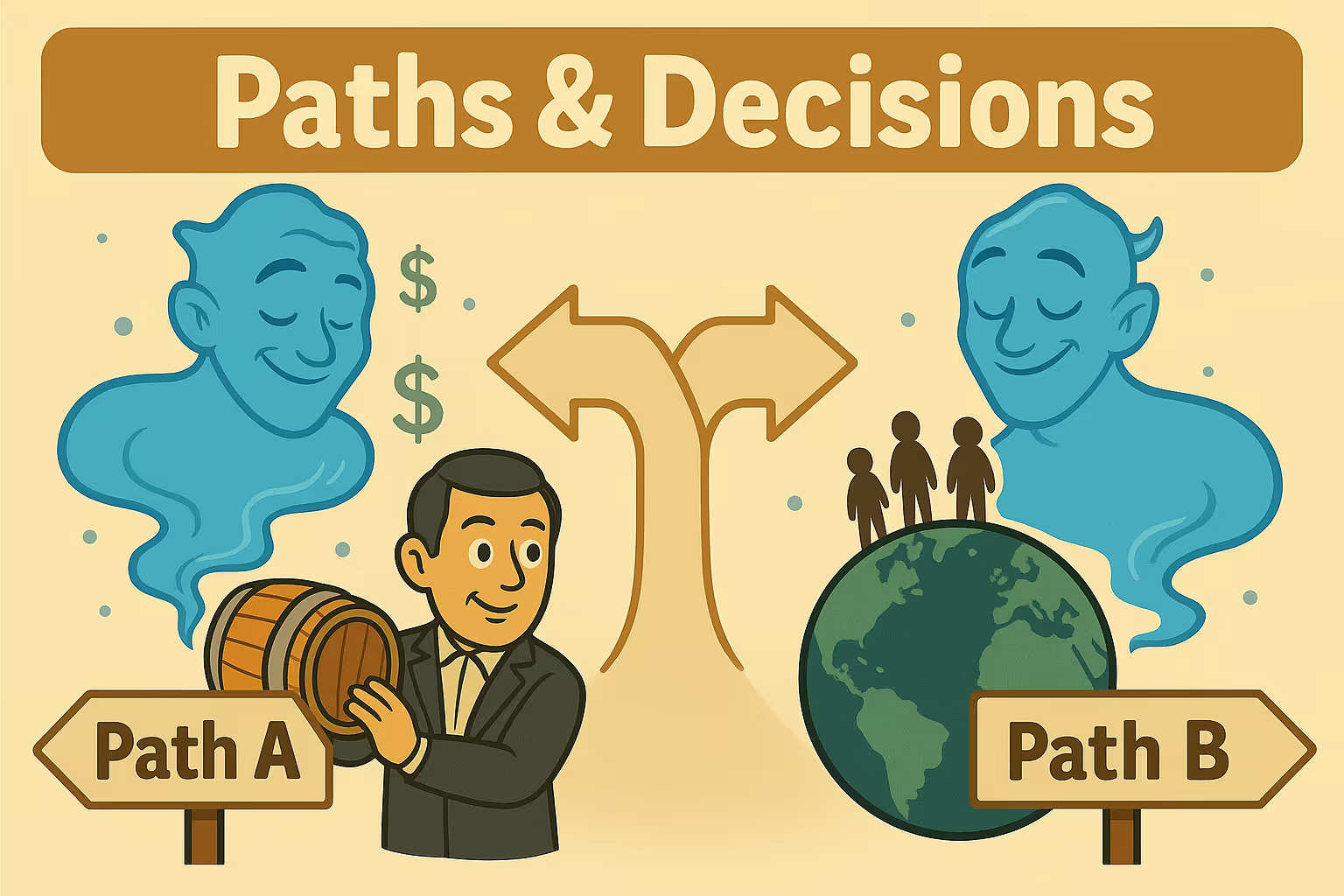

Ethan says, with all these efficiency gains, orgs must make a key decision:

Path A

increase efficiency and make more money per barrel of ale

Path B

expand reach and be Guinness and hire 100,000 people and expand worldwide

It's critical to Choose a Path and communicate to your org which path you are taking.

Hire Experts

Something interesting Ethan discusses is the finding that AI disproportionately improves the capabilities of experts rather than juniors.

The problem with juniors is they faithfully copy and paste what the AI says. It's the experts who can rapidly take the 80% that is correct, fix the 20% that is wrong, and know intuitively when to integrate the novel and exceptional ideas that AI surfaces.

Human Expertise is More Valuable, Not Less:

It turns out expertise actually is really good. None of these systems are as good as an actual expert at the top of their fields.

Ethics of Efficiency Gains

Recently, several stories have been trending online about overemployment. Ethan's talk raises an interesting question around the social contract between employee and employer.

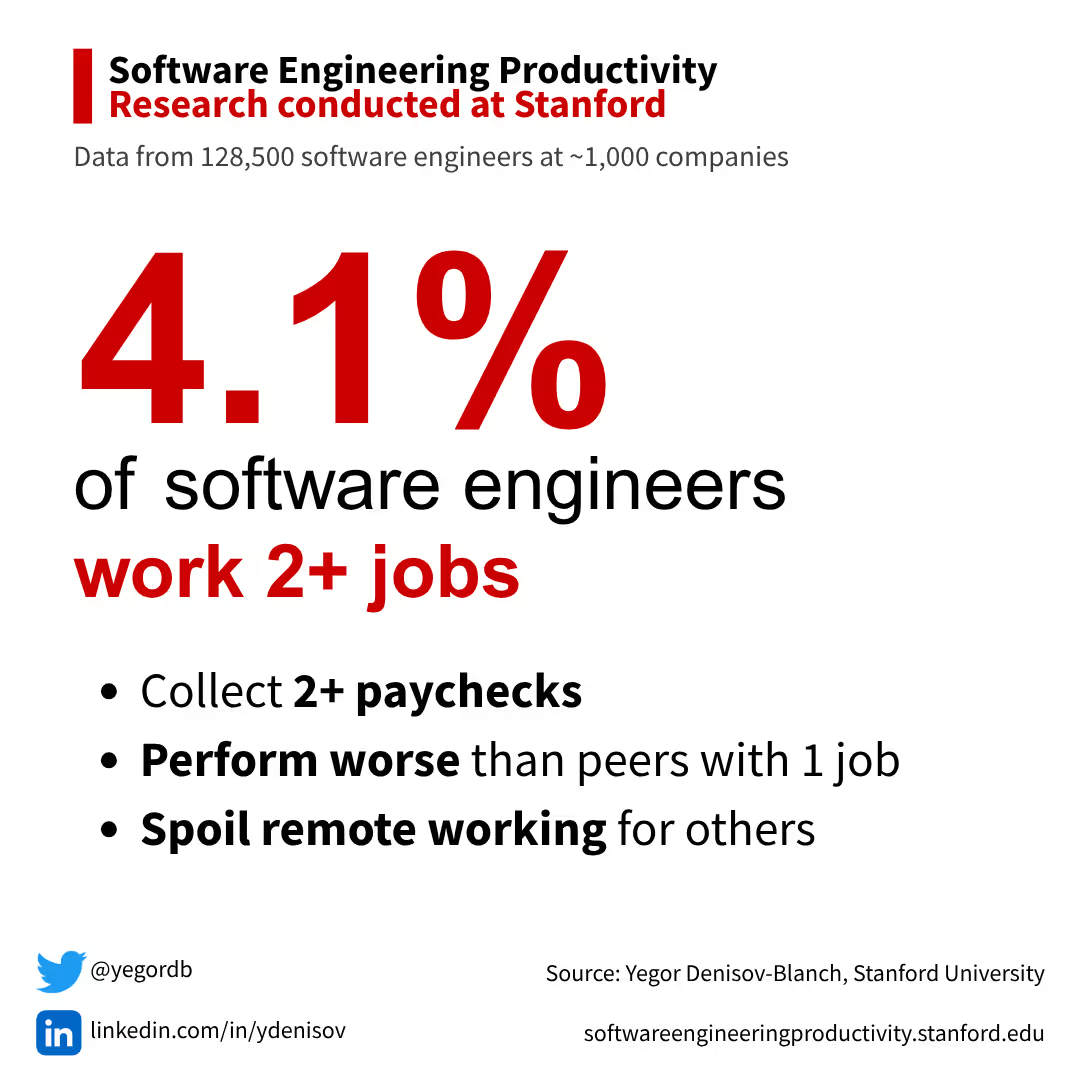

Notably, a recent study from the Software Engineering Productivity group at Stanford, covering 100K+ engineers at ~1,000 companies, showed that 4.1% of engineers (roughly 1 in 24) are overemployed (most likely in secret).

It's no wonder the internet was on fire on 4th of July weekend over the Soham story, with orgs waking up to discover Soham had red teamed their hiring process.

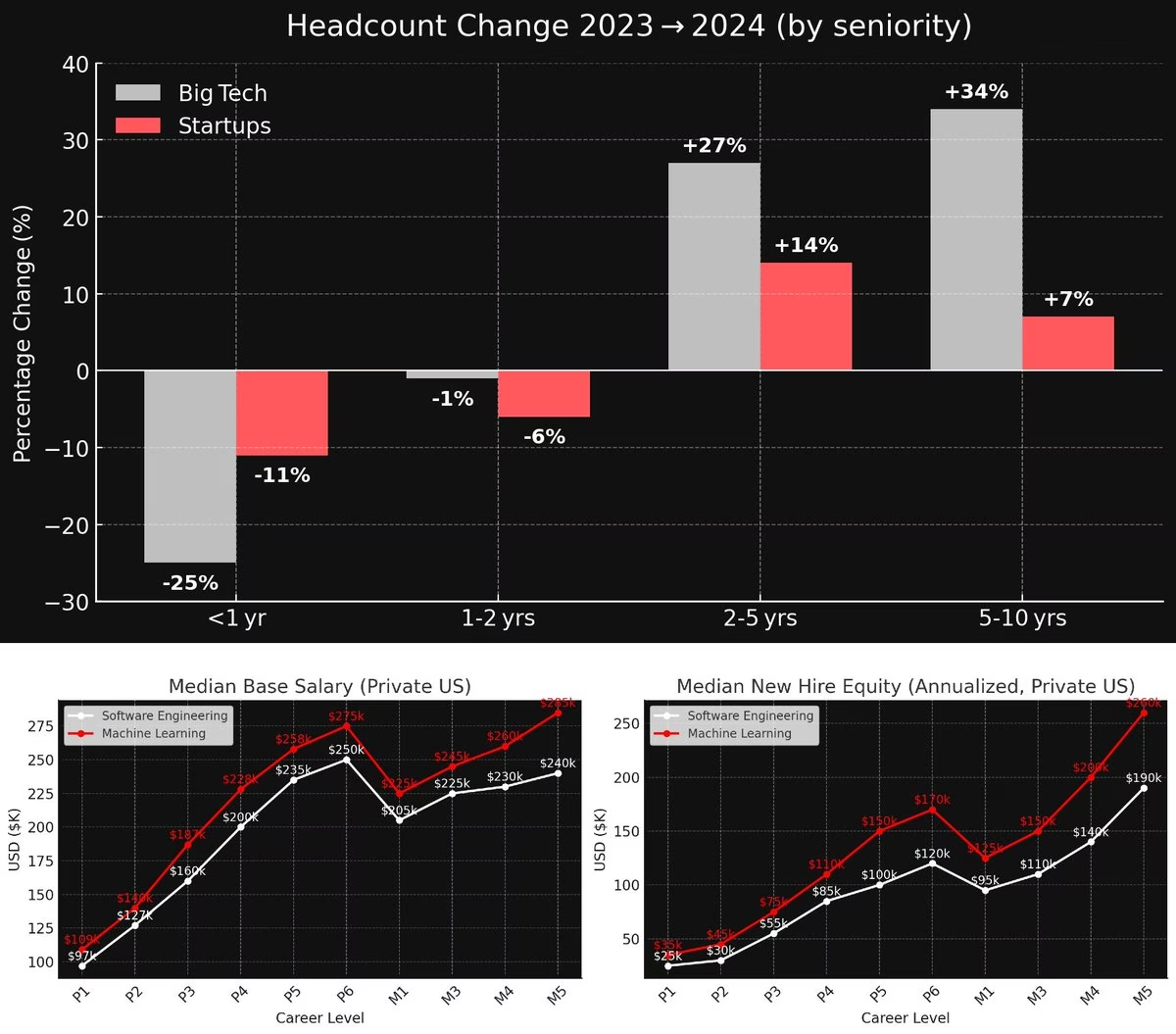

Interestingly Deedy (@deedydas) also reported recently that AI Engineering salaries have gone up 20% and orgs are staffing heavier with senior talent.

Tech hiring is not the same as it was even a year ago.

While lying to an employer is a form of financial infidelity and potentially illegal, the fact that thousands of people on Reddit claim to be overemployed by leveraging automation tools and AI suggests that the productivity gains of AI and automation are not being evenly shared between org and employee.

To Ethan's point, it's the organization's responsibility to honestly reckon with this dramatic shift in capital / human resource allocation.

If AI can supersize experts, should they be financially rewarded more for doing so?

As AI continues to reshape what's possible, it might be time to renegotiate the social contract around productivity and decide who truly benefits from these leaps.

Especially with regards to fairly compensating individuals for all the training data that went into these tools, including all the code on GitHub!

4 Examples

Over the last few months, I have read and listened to a variety of articles (tweets lol) and podcasts covering a bunch of different teams and orgs and how they operate.

Let's take a look at what 4 different orgs are doing right now and how that lines up with the advice from Ethan above.

Shopify Lets Crafters Cook

Shopify is absolutely on fire 🔥 right now. If anyone is espousing Ethan's suggestion of radical organizational innovation, it's Shopify.

They have gone AI first by rolling out AI tools to everyone in their org, and restructured their management to be bottom up servant style.

Additionally they are all in on Path B: take over the world, with >8,300 employees and an ambitious plan to have over 1000 young AI minded interns this year.

In this excellent interview with the supercool Sam Gregg-Wallace (@sjgreggwallace) the head of Talent at Shopify and the epic Peter Yang (@petergyang) they discuss how Shopify is maximally doubling down on engineering efficiency by innovating in org structure and roles.

Shopify has dismantled the traditional corporate ladder to create a mastery system that elevates the role of individual contributors to be as or more important than managers.

Sam explains how the company focuses on hiring and retaining the best crafters in the world who are passionate about the quality of the product.

He also says the company culture encourages a low distraction environment where crafters can cook. This means removing internal obstacles, such as excessive meetings and document writing, so that individuals can focus on prototyping and shipping code.

A key aspect of this philosophy is to hire high agency people who give a sh*t about their work and the company's mission.

Sam says:

Let crafters cook, right? What is the biggest slowdown is, seeking internal alignment or writing docs; instead of just doing the work prototyping and shipping code.

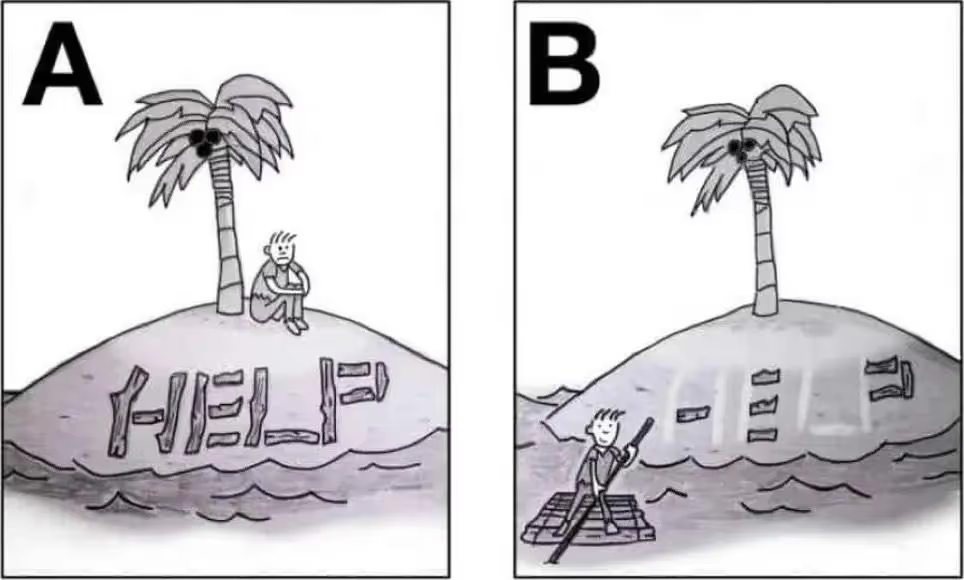

I saw a tweet from Arthur MacWaters (@arthurmacwaters) the Founder of Legion Health which sums it all up in a meme.

Looking for engineers who can do this pic.twitter.com/B3qGEQt5oP

— Arthur MacWaters (@ArthurMacwaters) June 30, 2025

The sub-text is clear. People want self managing employees, people with high agency. Dogs that walk themselves, not people who need to be managed on a leash!

What is High Agency?

If there is one thing that is bifurcating workers with Agentic Engineering and AI tools, it's having high agency.

If you haven't already, I highly recommend reading George Mack's (@george__mack) amazing blog on High Agency.

This was also recently covered by the Wall Street Journal when they reported on an excellent essay Agency is Eating the World by Gian Segato (@giansegato).

Gian says:

It's the willingness to act without explicit validation, instruction, or even permission.

And:

True agency involves defiance, improvisation, instinct, and often irrationality.

I'm no expert in traditional management structures, but acting with irrationality, in defiance and without permission is likely not in the S&P 500 playbook.

In his book Blitzscaling, Reid Hoffman (@reidhoffman) calls this the Marine: the engineering archetype you can drop behind enemy lines with minimal contact and have them open up new fronts and execute impossible missions.

the marines take the beach, the army takes the country, and the police govern the country. Marines are start-up people who are used to dealing with chaos and improvising solutions on the spot.

If I had to guess, this is probably a cyclic thing: when new frontiers open up, order and structure give way to chaos as pioneers who flourish in uncertainty move quickly to capture all the newly unlocked low hanging fruit.

A Hiring Heuristic

In an excellent interview titled Why Energy is the Best Predictor of Talent (no longer on YouTube), Daniel Gross (@danielgross) says a simple heuristic for hiring during interviews is to ask himself:

Would I work for this person?

Apparently so does Mark Zuckerberg, in a previous interview with Lex Fridman, as cited here.

you should only hire someone to be on your team if you would be happy working for them in an alternate universe

Maximally Deployed

It doesn't stop with talent recruitment. Shopify is so far ahead of everyone else in AI first adoption that their Head of Eng Farhan Thawar (@fnthawar) recently said in an interview they were using GitHub Copilot (2 years) before it was available as a product.

Farhan goes on to explain that the biggest gains from adopting AI internally have actually been with non engineering staff using Cursor.

Sales teams are vibe-coding their own tools and Technical PMs are opening PRs for their own features.

He explains what High Agency means for job roles with:

We're not a swim lane company which means that we don't try to put people into these roles where you are like you're in product so only think about product or you're in engineering only think about like how the code is written or architecture.

We're very much just curious problem solvers and so something's broken we expect uh curious people to go and look at the problem even doesn't matter what their role is and try to solve it.

On deploying Agentic AI tools across the board he says:

We believe everyone should be writing software just like my sales team is and everybody becomes more productive.

With an important caveat:

should the PR be accepted by engineering? What we say is yes, you can submit a PR but you have to understand the code you are writing yourself before you submit

Farhan says team members use multiple different AI subscription tools and they have a leaderboard of who is using the most tokens.

Some teams and managers might be thinking: okay, but this sounds expensive. We already have dozens of SaaS subscriptions, and now we have multiple different AI tools, ChatGPT, Claude and maybe Google Gemini. Isn't that enough?

Farhan has a different perspective. He says:

If I could give you a tool that could make your engineering team more productive by even 10%, would you pay for it? The answer is yes. Would you pay $1,000 a month for it?

My hypothesis here is $1,000 a month is too cheap.

If you consider the overemployed people from above, $12k a year is not much to pay if you are getting some non trivial increase in headcount efficiency with no recruitment or fixed costs required.
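To make that arithmetic concrete, here is a rough back-of-the-envelope sketch. The $1,000 a month and the 10% gain come from Farhan's quote; the fully loaded cost of an engineer ($200,000 a year) is a purely hypothetical figure picked for illustration, and the function names are my own.

```python
# Back-of-the-envelope check of the "$1,000 a month is too cheap" claim.
# ASSUMPTION: annual_engineer_cost is a hypothetical figure for illustration,
# it does not come from the interview.


def annual_tool_cost(monthly_cost: float) -> float:
    """Yearly spend on AI tooling for one engineer."""
    return monthly_cost * 12


def break_even_gain(monthly_cost: float, annual_engineer_cost: float) -> float:
    """Fraction of productivity gain needed for the tooling to pay for itself."""
    return annual_tool_cost(monthly_cost) / annual_engineer_cost


def net_value(monthly_cost: float, annual_engineer_cost: float, gain: float) -> float:
    """Value of the extra output minus the tooling spend, per engineer per year."""
    return annual_engineer_cost * gain - annual_tool_cost(monthly_cost)


if __name__ == "__main__":
    monthly_cost = 1_000            # from the quote above
    gain = 0.10                     # "even 10%" from the quote above
    annual_engineer_cost = 200_000  # hypothetical, for illustration only

    print(f"Tool cost per year: ${annual_tool_cost(monthly_cost):,.0f}")
    print(f"Break-even gain: {break_even_gain(monthly_cost, annual_engineer_cost):.1%}")
    print(f"Net value at {gain:.0%}: ${net_value(monthly_cost, annual_engineer_cost, gain):,.0f}")
```

Under these assumed numbers the tooling pays for itself at a 6% gain, and a 10% gain leaves about $8,000 per engineer per year. The break-even point moves with the cost you plug in, so treat it as a way to frame the question rather than an answer.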

On the topic of hiring and evaluations, Farhan says all engineering roles, including VPs and above, are given coding interviews:

Yeah, because we believe that the best leaders here were ones who were not running away from coding

For interviews, Shopify expects you to use AI in the interview:

If they don't use a co-pilot, they usually get creamed by someone who does. So, they will have no choice but to use a co-pilot

Echoing Ethan's point about experts knowing when to use AI, and when and how to correct it, Farhan says that during failed interviews:

They will try to prompt to fix it. Like... just change the one character and they won't... and they won't change it.

Farhan sums up their AI first hiring philosophy with:

I want you to come to work with an LLM and a brain, not one or the other.

Finally, Farhan also alludes to a powerful realisation: adopting other people's tools means adopting their way, so if you need to forge your own innovative path you must make your own tooling.

first you make the tool and then the tool makes you

Answer Dot AI - An R&D First Org

I watched a great podcast a few weeks ago by the illustrious Jeremy Howard (@jeremyphoward) and Matt Turck (@mattturck) and noticed the way Jeremy described Answer.AI mirrored a lot of advice Ethan has above.

It would seem that Answer.AI is all about Path A: small team efficiency. And Jeremy doesn't just have a makeshift internal lab; Answer.AI IS an R&D lab.

In the interview Jeremy talks about how they have built internal tools for dialogue engineering that multiply the efficiency of their own teams, such that they don't need to grow.

In fact in Jeremy's own words: growth is a failure case because limiting team size forces them to be extremely efficient. So much so, they do not have professional managers or traditional roles.

What's Old is New

Much like Ethan Mollick is urging leaders to innovate in their org structure, Jeremy has taken a leaf out of Thomas Edison's book and his famous lab in Menlo Park.

Menlo Park had a team of "tinkerers" who experimented with new technology (electricity) to see what could be made.

In this environment, light bulbs, dynamos, phonographs and early motion pictures were invented. This wasn't just Science, this was Art!

Jeremy explains how Answer.AI acts in a similar R & D style. They operate on a tight cycle where development drives research.

As they try to build a product, if they hit a constraint, they perform the necessary research to overcome it; which in turn opens up new green fields, which in turn leads to new potential products.

Instead of scaling by hiring more people and creating departments, Answer.AI are building a "substrate" of AI, automation, and internal tools. This substrate, which includes their development platform Solve it and web framework FastHTML, is what allows them to get faster and give themselves superpowers.

To Ethan's point above about KPIs, what KPIs would Thomas Edison have given his R&D staff? I'd love to hear Jeremy's answer to that one.

Most relevant to this post, Jeremy talks about how Answer.AI has a secret weapon: the aforementioned internal tool Solve it (Jeremy, I'd love an invite to try it), which he says blends the best worlds of:

- cursor

- jupyter

- claude code

- chatgpt

☝️ Can you imagine that? 🙊

Until I used Claude Code with Tokamine Mode I didn't really appreciate how powerful this kind of tool will actually be, but now I fully get it. Every time I jump over to Jupyter I find its lack of AI tooling to be extremely jarring and it really kills my flow.

Whoever manages to combine these tools into the next killer workflow will be the Microsoft Office of the 2030s.

Answer.AI's core belief is that the optimal approach to AI Productivity software is a close, interactive collaboration between humans and AI.

It sounds like Jeremy and Farhan agree:

first you make the tool and then the tool makes you

Jeremy is also acutely aware of the critical importance, pointed out by Karpathy in his Software 3.0 video, of creating tooling and outputs for LLMs (People Spirits), not just people.

That is why he created llms.txt, a proposal for standardizing a kind of AI friendly home page on websites for AI systems.
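As a rough illustration of the idea, the sketch below renders a minimal llms.txt-style file. It assumes the layout described in the llms.txt proposal as I understand it (an H1 title, a blockquote summary, then H2 sections containing markdown link lists). The project name, URLs and the render_llms_txt helper are all hypothetical.

```python
# Minimal sketch of rendering an llms.txt-style file.
# ASSUMPTION: the layout (H1 title, blockquote summary, H2 sections of
# markdown links) follows the llms.txt proposal as I understand it.
# The site name, URLs and this helper are hypothetical examples.


def render_llms_txt(title, summary, sections):
    """Build the file text from a title, a summary and {heading: [(name, url, note)]}."""
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for name, url, note in links:
            # A note is optional; when present it follows the link after a colon.
            suffix = f": {note}" if note else ""
            lines.append(f"- [{name}]({url}){suffix}")
        lines.append("")
    # Normalize to exactly one trailing newline.
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(
        render_llms_txt(
            "Example Project",
            "A hypothetical project used to illustrate the file layout.",
            {
                "Docs": [
                    ("Quick start", "https://example.com/quickstart.md", "Setup steps"),
                    ("API reference", "https://example.com/api.md", ""),
                ]
            },
        )
    )
```

The point of the format is that the output is plain markdown that both a person and an LLM can read, so a site can offer one concise entry point instead of making an agent crawl rendered HTML.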

Cursor maintains Hacker Energy

In this interview, Going Beyond Code, Superintelligent AI Agents, And Why Taste Still Matters, Y Combinator host Garry Tan (@garrytan) and Michael Truell (@mntruell) discuss how Cursor started and how they have structured their organization for maximum effectiveness.

Michael talks a lot about what Cursor (company Anysphere), potentially the first AI unicorn of the new AI tooling wave (they kind of started it), does for hiring and managing staff.

Firstly, he points out the co-founders, including the CEO, are all technical and continue to write code to maintain Anysphere's founding "hacker energy" even as it scales.

The culture is built on a foundation of intense product focus and experimentation, he says, and blends both coordinated and independent work.

While there are:

big projects that require a lot of coordination amongst people where you need top down alignment

They are also committed to being a place that fosters:

a good degree of bottoms up experimentation too

Michael says to encourage this bottom-up innovation, they have a specific structural approach where they:

explicitly take teams of engineers, sectioning them off from the rest of the company and kind of just giving them carte blanche to experiment on what they'd like.

This echoes much of what Ethan, Jeremy and Sam have said above.

A core part of their product development process is a relentless focus on dogfooding their own tool:

the kind of main thing that we really acted on was just we reload the editor

Throughout the interview Michael emphasizes the importance of hiring the right people and maintaining that "hacker energy" through experimentation and a focus on passionate individuals.

On hiring he cautions to:

really nail the first 10 people to come into the company they will both accelerate you in the future

He says these initial employees act as keepers of a really high bar, the opposite of which is often called the "Bozo Effect".

Michael says they seek out generalist polymaths who are fantastic at building and shipping production code quickly.

The ideal candidate is someone who can bleed across disciplines: product minded and commercially minded, but who has also actually trained models at scale.

The Bozo Effect

Popularized by Guy Kawasaki (@guykawasaki) and explained in this blog post by Arani Satgunaseelan from Vollardian:

Steve believed that A players hire A players—that is people who are as good as they are. I refined this slightly—my theory is that A players hire people even better than themselves. It’s clear, though, that B players hire C players so they can feel superior to them, and C players hire D players. If you start hiring B players, expect what Steve called 'the bozo explosion' to happen in your organization.

Google is So Back

You might be forgiven for thinking this kind of organizational innovation is only for new, smaller orgs, but that's not true.

As of this writing, Google has also dropped their own CLI tool gemini-cli.

However, while there are mixed reports on how gemini / gemini-cli compare to Anthropic's Claude Code when it comes to productivity and value, one thing is for sure:

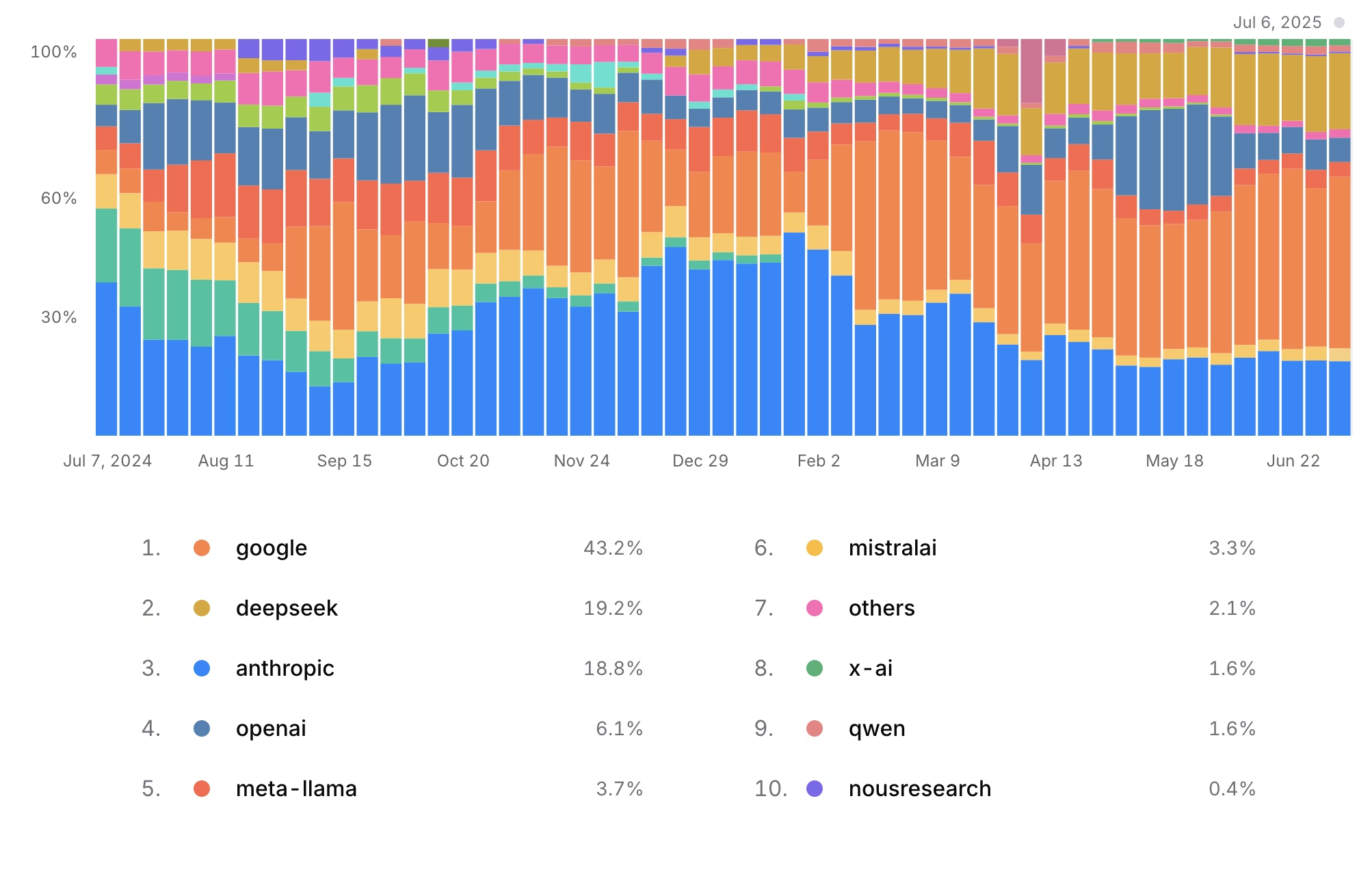

API Market Share is Google's

OpenRouter recently updated their rankings of token market share, and Google holds a staggering 43.2% of all tokens processed by OpenRouter.

To Jeremy's point in the above interview, people really care about speed and price, and it appears that is where Google is dominating.

As Karpathy puts it in Software Is Changing (Again), LLMs are now utilities like electricity. Imagine being a business today that doesn't use electricity? Now imagine being a business who introduced electricity before their competitors.

Turning the Titanic

One of the largest culture shifts in history seems to have been taking place internally at Google under Sundar Pichai and Sir Demis Hassabis.

I have been following the awesome Google Principal Engineer Jaana Dogan (@rakyll) for a while on Twitter, and recently she seems to be talking a lot about how Google has internally rebooted into a totally different org.

So what has Jaana been saying that mirrors Ethan and the 3 other orgs above?

Just see some of these banger tweets from Rakyll:

The overall velocity of software has visibly changed in the last two years. I don't think our existing ways to structure companies work anymore. The cost of building and throwing away is minimal.

— Jaana Dogan ヤナ ドガン (@rakyll) February 14, 2025

You don't really need a lot of engineers to build. You don't need a lot of managers to track. You have to let people do what they think valuable, and connect the dots later. Large companies need to learn how to become better incubators.

— Jaana Dogan ヤナ ドガン (@rakyll) 14, 2025

☝️ And that was in February!!

People last year: Google has completely missed the AI race, no way to flip things around.

People this year: Google has some of the strongest assets in AI. Transformations in this space is actually exponential.

— Jaana Dogan ヤナ ドガン (@rakyll) June 27, 2025

I have to say between things like Notebook LM and Gemini 2.5 Pro (that context window 😍), Open Source Gemma models and now gemini-cli, it does very much feel like "We're so back"!

If Google can do this, then anyone can...

Hey Apple...AMD...? Wazzup!?

Delegation versus Top Down Control

I think this all points to a shift in how people need to organize themselves going forward.

Michael Timothy Bennett (@mitibennett), an AI and consciousness researcher (and Australian 💪), recently talked about something interesting in an excellent podcast, "How to build conscious machines", on Michael Levin's channel.

Simplicity Doesn't Restrict Functionality

Looking to explain why simplicity in nature doesn't appear to restrict functionality (you don't need complex systems to be effective), he found an interesting example from the Bosnian war in the 1990s.

There were two different military groups, the Dutch and the Nordic forces, which had significantly different organizational structures.

- The Dutch forces had a rigid top-down organizational structure, very rulebound:

Everything had to be run all the way back up the food chain to headquarters for every decision. Um and as a result, it was very maladaptive.

They couldn't adjust to situations on the ground.

The consequence was a notorious failure that resulted in the Srebrenica massacre (the worst mass killing in Europe since World War II), after which politicians lost their jobs.

- The Nordic forces had a culture of what's called mission command:

Everything was delegated as much as possible to the soldiers.

The individual soldiers were informed of the larger objectives and expected to bend regulations and rules in favor of uh those sort of larger objectives.

👆 expected to bend regulations and rules - sounds high agency AF

As a result, they avoided many of the problems that befell their Dutch counterparts.

Michael suggests that adaptation requires delegation of control. He draws parallels to biological systems where control is distributed across different levels of abstraction, from cells to organs to the entire organism.

In the lecture he talks about processes like homeostasis and self repair requiring this kind of delegated control because a centralized command can't manage every cellular level decision.

That's not to say that the Dutch way doesn't work under certain conditions.

When Reid Hoffman says police govern the country, he's talking about rigid structures designed intentionally to be inflexible but stable over long periods of time. These patterns are best utilized once a market is captured and a moat deployed. That playbook served companies pretty well up until the 2010s.

But since everything has just changed, and there is no moat, it's adapt or die.

After listening to Ethan and to people talking about prominent players in the AI / Tech space, it's clear that Dutch style slow top-down control, traditional roles, management layers and 1x productivity are a thing of the past.

How many businesses will survive this pivot to AI first, and how many will be crushed by innovative start-ups full of high agency, technically competent, AI first individuals?

Either way, I have no doubt we will read about it in real time on X. 😭

Stay Tuned

Stay tuned for Part 3, where I'll wrap this up by collating advice for Startups, Orgs, Managers, Engineers and anyone who wants to start their vibe-coding journey!

Madhava Jay (@madhavajay)

List of People to Follow on Twitter

Academics, Founders, Hackers, Leaders, and Agents of Change:

Ethan Mollick (@emollick)

Yegor Bugayenko (@yegordb)

Deedy (@deedydas)

Gergely Orosz (@GergelyOrosz)

Sam Gregg-Wallace (@sjgreggwallace)

Peter Yang (@petergyang)

Arthur MacWaters (@ArthurMacwaters)

George Mack (@george__mack)

Gian Segato (@giansegato)

Reid Hoffman (@reidhoffman)

Daniel Gross (@danielgross)

Farhan Thawar (@fnthawar)

Jeremy Howard (@jeremyphoward)

Matt Turck (@mattturck)

Garry Tan (@garrytan)

Michael Truell (@mntruell)

Guy Kawasaki (@guykawasaki)

Jaana Dogan (@rakyll)

Armin Ronacher (@mitsuhiko)

Michael Timothy Bennett (@mitibennett)

Madhava Jay (@madhavajay)

Media Links

- Every leader needs this AI strategy (Strange Loop Podcast) – Ethan Mollick

- Shopify - Sam Gregg-Wallace & Peter Yang

- Farhan Thawar on Shopify engineering & AI

- Jeremy Howard & Matt Turck – Answer.AI

- Cursor interview: Going Beyond Code, Superintelligent AI Agents

- Daniel Gross – Why Energy is the Best Predictor of Talent (Podcast on Apple)

- Zuckerberg with Lex Fridman on hiring

- Michael Timothy Bennett on building conscious machines (Michael Levin’s channel)

- Karpathy Software 3.0